Benchmark models using OpenAI-compatible APIs

Recently, I wrote about why you should write your own benchmarks for language models to see how they work for your app. I also shared a ready-to-use Jupyter Notebook that allows you to evaluate language models on Ollama. I’ve just published a new version of the notebook that supports any language model host exposing OpenAI-compatible APIs.

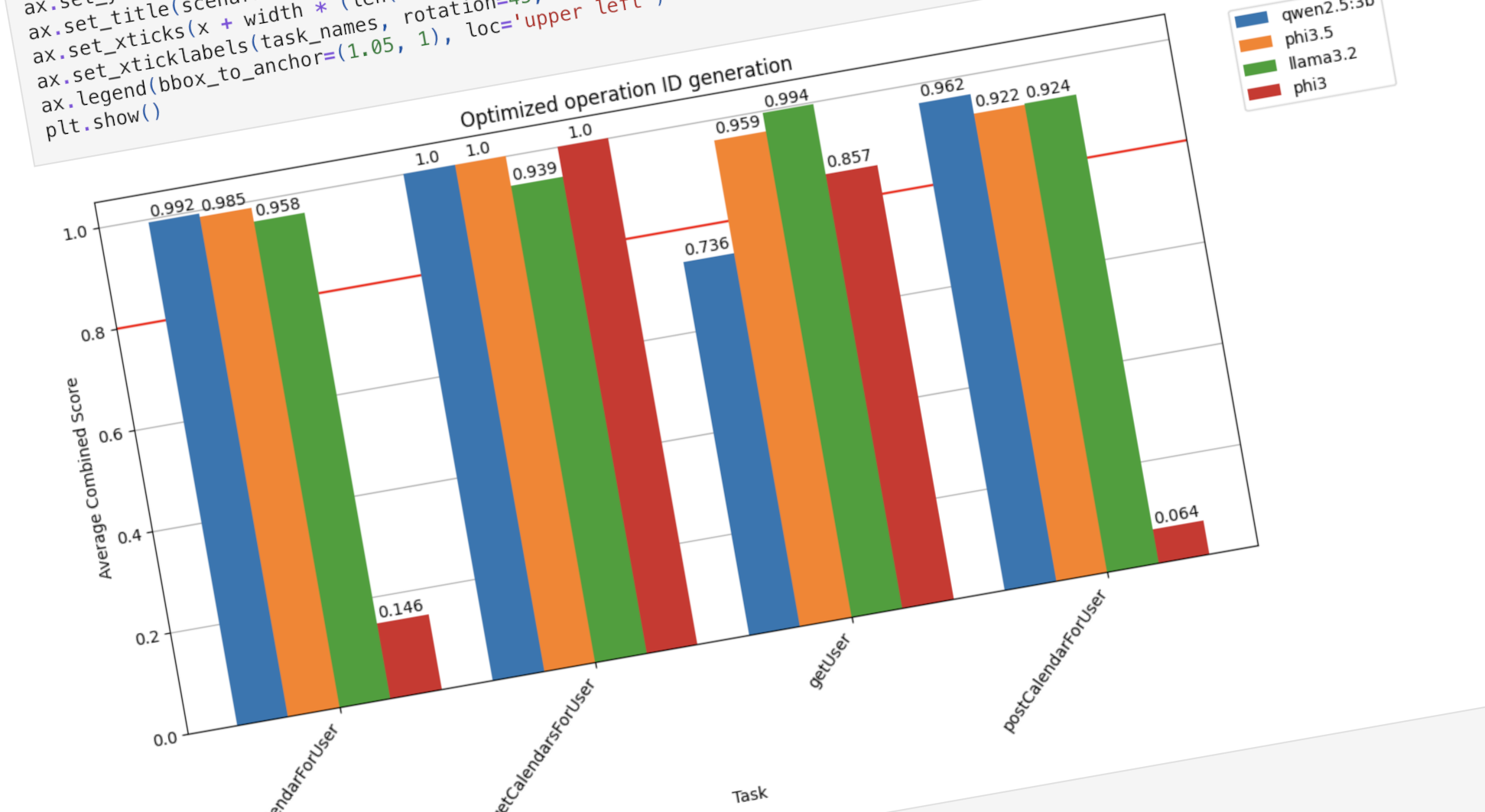

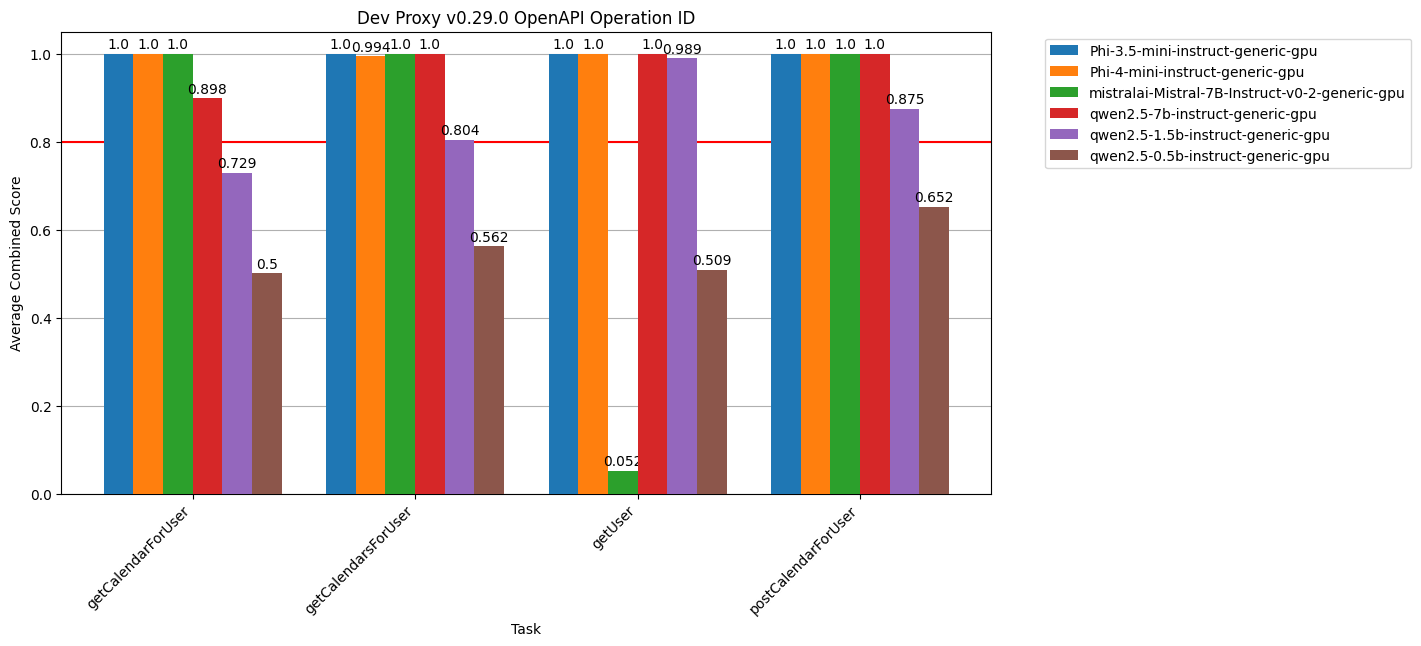

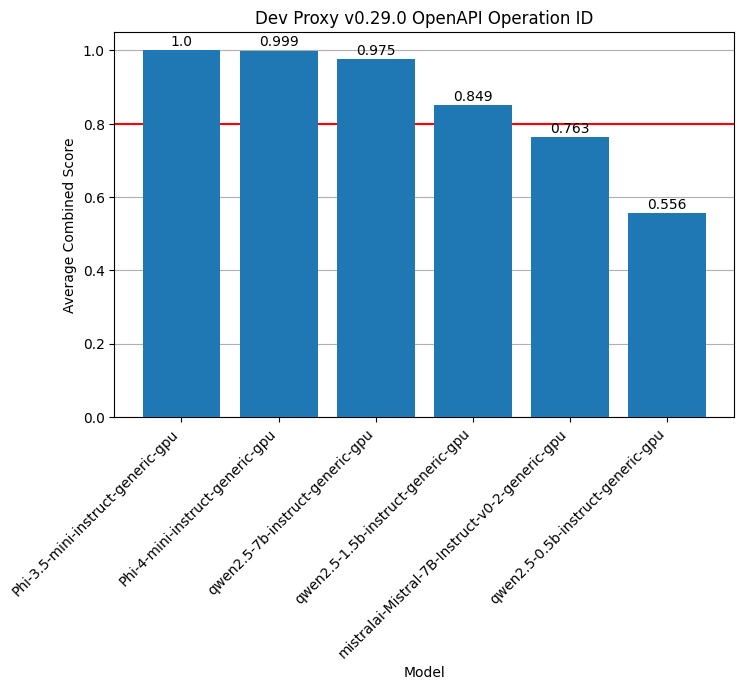

Like the previous version, the new notebook shows you how well your selected language models perform in each scenario and overall.
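The notebook’s own scoring code isn’t reproduced here, but the idea of per-scenario and overall results can be sketched in a few lines. This is a minimal sketch under a few assumptions: each test case is a hypothetical `(scenario, prompt, expected answer)` tuple, an answer passes on a case-insensitive exact match, and `complete(model, prompt)` is a stand-in for the actual call to the model host.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical test case shape: (scenario, prompt, expected answer)
TestCase = tuple[str, str, str]


def score_models(
    models: list[str],
    cases: list[TestCase],
    complete: Callable[[str, str], str],
) -> dict[str, dict[str, float]]:
    """Return each model's pass rate per scenario plus an 'overall' pass rate."""
    results: dict[str, dict[str, float]] = {}
    for model in models:
        passed: dict[str, int] = defaultdict(int)
        total: dict[str, int] = defaultdict(int)
        for scenario, prompt, expected in cases:
            answer = complete(model, prompt)
            total[scenario] += 1
            # Assumed check: case-insensitive exact match; real scenarios may need fuzzier checks
            if answer.strip().lower() == expected.strip().lower():
                passed[scenario] += 1
        scores = {scenario: passed[scenario] / total[scenario] for scenario in total}
        scores["overall"] = sum(passed.values()) / sum(total.values())
        results[model] = scores
    return results


cases = [
    ("sentiment", "I love it", "positive"),
    ("sentiment", "I hate it", "negative"),
    ("math", "2 + 2", "4"),
]


def fake_complete(model: str, prompt: str) -> str:
    # Stand-in for a real API call: "big" always answers correctly, "small" always says "positive"
    answers = {"I love it": "positive", "I hate it": "negative", "2 + 2": "4"}
    return answers[prompt] if model == "big" else "positive"


results = score_models(["small", "big"], cases, fake_complete)
print(results)
```

Keeping the model call behind a plain function makes the scoring independent of the host, which is the same reason the notebook can switch between hosts without touching the benchmark logic.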

What’s changed is that you can now test models running not just on Ollama but on any host that exposes OpenAI-compatible APIs, be it Foundry Local, Ollama, Azure AI Foundry, or OpenAI. When selecting the models to test, you can now specify the language model host and, if it needs one, an API key:

```python
from openai import OpenAI

# Foundry Local
client = OpenAI(
    # The OpenAI client requires an api_key value; "local" is a placeholder
    api_key="local",
    base_url="http://localhost:5272/v1"
)

models = [
    "qwen2.5-0.5b-instruct-generic-gpu",
    "qwen2.5-1.5b-instruct-generic-gpu",
    "qwen2.5-7b-instruct-generic-gpu",
    "Phi-4-mini-instruct-generic-gpu",
    "Phi-3.5-mini-instruct-generic-gpu",
    "mistralai-Mistral-7B-Instruct-v0-2-generic-gpu",
]
```

By default, the notebook includes a configuration for Foundry Local and Ollama.
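Because every host speaks the same protocol, moving between them only changes the base URL and the key. To show roughly what the `openai` client sends, here’s a standard-library sketch that builds (but doesn’t send) a chat completions request. It assumes Ollama’s OpenAI-compatible endpoint at its default address, `http://localhost:11434/v1`; `llama3.2` is just a placeholder model name, and `build_chat_request` is a hypothetical helper, not part of the notebook.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style POST /chat/completions request (hypothetical helper)."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Ollama expects a key to be present but doesn't check it
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Assumed: Ollama running locally on its default port
req = build_chat_request("http://localhost:11434/v1", "ollama", "llama3.2", "Say hello")
# urllib.request.urlopen(req) would send it; left out so the sketch runs without a server
```

Only the first two arguments differ between Foundry Local, Ollama, and hosted services, which is why the notebook only asks you for the host and an optional key.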

I’ve also moved the prompts into Prompty files. Using Prompty makes it convenient to keep the prompts separate from the notebook’s code. It also lets you quickly run a prompt on its own and see the effect of any changes you make. Try it for yourself to see how easy it is.
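Prompty files combine YAML front matter with a prompt template, where lines like `system:` and `user:` start each chat message. As a rough illustration of what keeping prompts out of the code looks like, here’s a simplified, standard-library parser for a Prompty-style file. It’s a sketch under assumptions, not the Prompty runtime: the prompt is hypothetical, the front matter is kept as raw text instead of being parsed as YAML, and only simple `{{name}}` placeholders are supported.

```python
import re

# Hypothetical Prompty-style file contents
PROMPTY = """---
name: Classify sentiment
description: Hypothetical example prompt
inputs:
  text:
    type: string
---
system:
Classify the sentiment of the text as positive or negative. Reply with one word.

user:
{{text}}
"""

ROLE = re.compile(r"^(system|user|assistant):\s*$")


def split_prompty(source: str) -> tuple[str, str]:
    """Split a Prompty-style file into raw front matter and the template body."""
    lines = source.splitlines()
    if not lines or lines[0].strip() != "---":
        return "", source
    end = lines.index("---", 1)  # closing front matter delimiter
    return "\n".join(lines[1:end]), "\n".join(lines[end + 1:])


def render(template: str, **values: str) -> str:
    """Replace {{name}} placeholders with the given values."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: values[m.group(1)], template)


def to_messages(body: str) -> list[dict[str, str]]:
    """Turn 'role:' sections into OpenAI-style chat messages."""
    messages: list[dict[str, str]] = []
    for line in body.splitlines():
        match = ROLE.match(line)
        if match:
            messages.append({"role": match.group(1), "content": ""})
        elif messages:  # text before the first role marker is ignored
            messages[-1]["content"] += line + "\n"
    return [{"role": m["role"], "content": m["content"].strip()} for m in messages]


front_matter, body = split_prompty(PROMPTY)
messages = to_messages(render(body, text="I love this notebook!"))
```

The resulting `messages` list has the shape that OpenAI-compatible chat APIs accept, so editing the prompt means editing the file, not the notebook.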

If you’re using language models in your app, you should be testing them to choose the one that’s best for your scenario. This Jupyter Notebook helps you do that.