Calculate the number of language model tokens for a string

Here’s an easy way to calculate the number of language model tokens for a string.

When working with language models, you might need to know how many tokens are in a string. Often, you use this information to estimate the cost of running your application. You might also need the token count to tell whether your text fits into the context window of the language model you’re working with, or whether you need to chunk it first.
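To make those two uses concrete, here’s a minimal Python sketch that starts from a token count (or a list of token IDs) you’ve already obtained. The context-window size and the price per million tokens are placeholder values for illustration, not the limits or prices of any real model. In practice, providers often price input and output tokens differently, and the helper names are hypothetical.

```python
# Hypothetical helpers. The context-window size and price used below are
# placeholders, not real model limits or real prices.


def fits_in_context(num_tokens: int, context_window: int, reserved_for_output: int = 0) -> bool:
    """Return True if the prompt tokens plus the room reserved for the reply fit in the window."""
    return num_tokens + reserved_for_output <= context_window


def chunk_tokens(tokens: list[int], max_tokens: int) -> list[list[int]]:
    """Split a list of token IDs into consecutive chunks of at most max_tokens each."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return [tokens[i:i + max_tokens] for i in range(0, len(tokens), max_tokens)]


def estimate_cost(num_tokens: int, price_per_million_tokens: float) -> float:
    """Estimate cost assuming a single flat price per one million tokens."""
    return num_tokens / 1_000_000 * price_per_million_tokens


token_ids = list(range(10))  # stand-in for real token IDs produced by a tokenizer
print(fits_in_context(len(token_ids), context_window=8))  # False
print(chunk_tokens(token_ids, 4))  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
print(estimate_cost(2_000_000, price_per_million_tokens=0.5))  # 1.0
```

Chunking on token IDs rather than characters guarantees each chunk stays within the limit for the tokenizer that produced the IDs; you’d decode each chunk back to text before sending it.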

You can roughly estimate the number of tokens by dividing the number of characters in your string by 4. However, this is a very rough estimate. The actual number of tokens depends strongly on the tokenizer of the language model you’re using. So for your calculations to be correct, base them on the tokenizer of the actual model that you’re using.
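The rule of thumb fits in a few lines of Python. Rounding up and the `chars_per_token` parameter are my own choices for this sketch; the function never looks at a real tokenizer, so the result can differ noticeably from the true count, especially for code or non-English text.

```python
import math


def rough_token_estimate(text: str, chars_per_token: float = 4.0) -> int:
    """Ballpark token count: number of characters divided by ~4, rounded up.

    This ignores the model's actual tokenizer, so use it only as a rough guide.
    """
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


print(rough_token_estimate("Hello, world!"))  # 13 characters -> 4
```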

To help you calculate the number of tokens in a string, I put together a Jupyter Notebook. It allows you to calculate the number of tokens for a string, a file, or all files in a folder.
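The notebook’s own code isn’t shown here, but the string/file/folder structure can be sketched roughly like this. The helper names are hypothetical, and `count_fn` is assumed to be any function that turns a string into a token count (for example, one built on a real tokenizer). The demo uses a whitespace word count purely as a stand-in.

```python
import tempfile
from pathlib import Path
from typing import Callable

# Any function that maps a string to a token count, e.g. one built on a real
# tokenizer. These helper names are hypothetical, not taken from the notebook.
CountFn = Callable[[str], int]


def count_string(text: str, count_fn: CountFn) -> int:
    """Count tokens in a single string."""
    return count_fn(text)


def count_file(path: Path, count_fn: CountFn, encoding: str = "utf-8") -> int:
    """Read a text file and count its tokens."""
    return count_fn(path.read_text(encoding=encoding))


def count_folder(folder: Path, count_fn: CountFn, pattern: str = "*") -> dict[str, int]:
    """Count tokens for every file under `folder` (recursively) that matches `pattern`."""
    return {
        p.relative_to(folder).as_posix(): count_file(p, count_fn)
        for p in sorted(folder.rglob(pattern))
        if p.is_file()
    }


def word_count(text: str) -> int:
    """Stand-in counter for the demo below: splits on whitespace. NOT a real tokenizer."""
    return len(text.split())


# Demo on a throwaway folder with two small text files.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "a.txt").write_text("one two three", encoding="utf-8")
    (root / "sub").mkdir()
    (root / "sub" / "b.txt").write_text("four five", encoding="utf-8")
    demo_counts = count_folder(root, word_count)

print(demo_counts)  # {'a.txt': 3, 'sub/b.txt': 2}
```

Because `count_file` decodes files as text, a folder containing binary files would raise a decoding error; narrowing `pattern` (for example to `"*.md"`) avoids that.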

After you clone the repo, start by restoring all dependencies. You can do this using uv, by running:

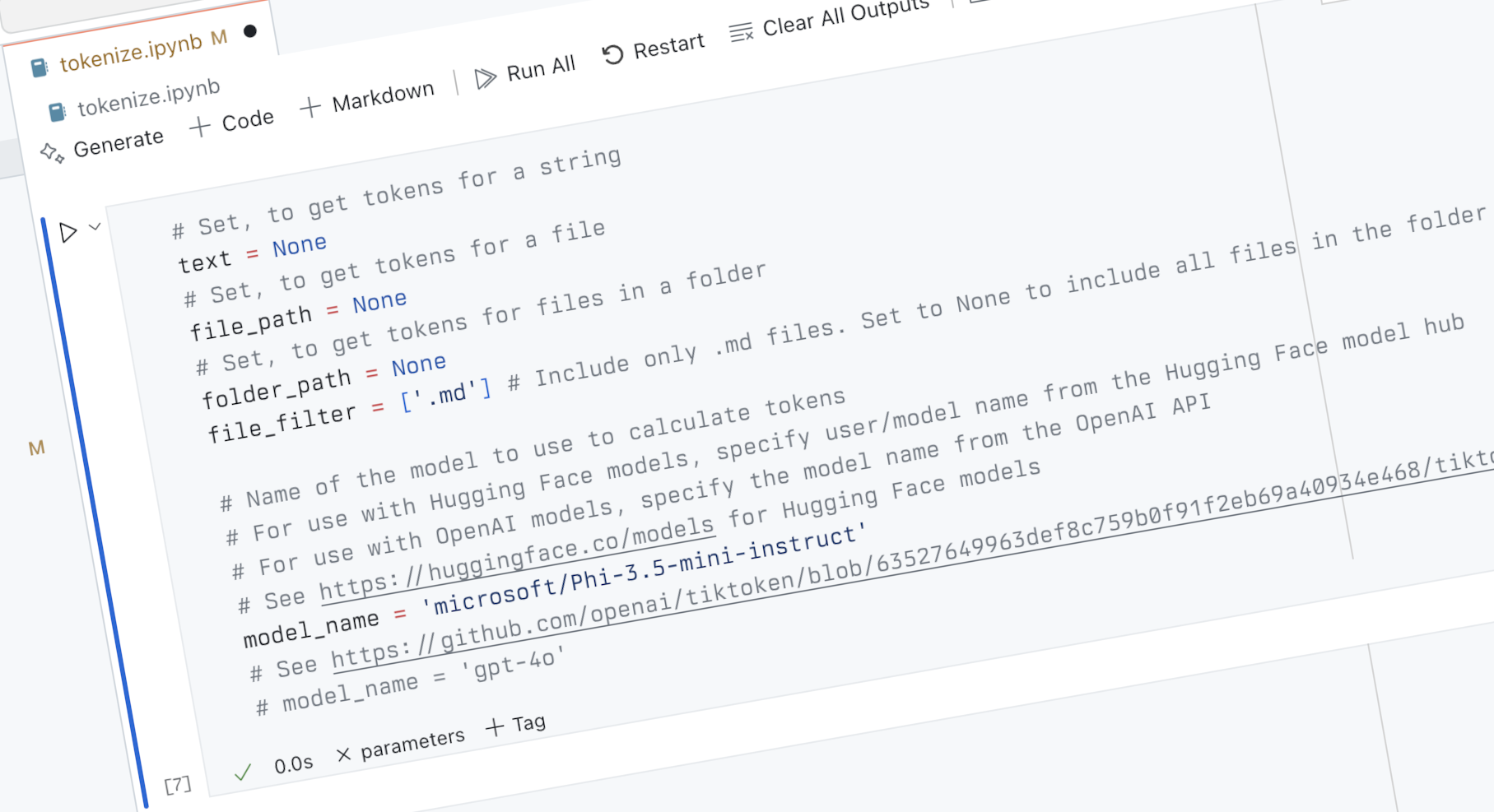

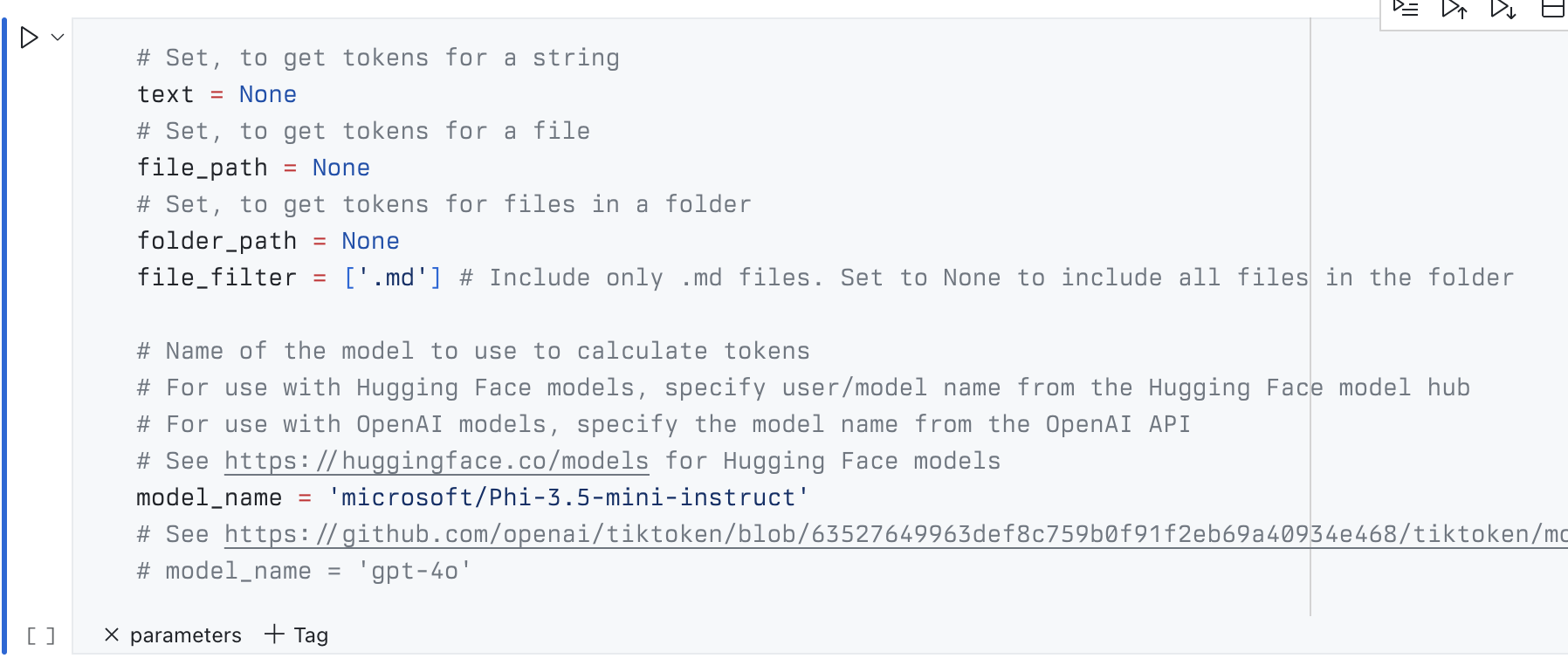

uv syncNext, open the notebook. Start with specifying the string/file/folder for which you want to calculate the number of tokens. Then, choose the language model you’re using in your application. You can either use a Hugging Face or OpenAI model. For Hugging Face models, specify the name in the user/model_name format. For OpenAI models, simply pass the name of the model. Here’s the list of supported models.
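As a rough illustration of how such a model switch could work, here’s a sketch assuming the `transformers` and `tiktoken` packages. The rule “a name containing a slash is a Hugging Face model, anything else is an OpenAI model” is my assumption based on the two naming formats, not necessarily what the notebook does. `AutoTokenizer.from_pretrained` and `tiktoken.encoding_for_model` are real APIs; `pick_backend` and `count_tokens` are hypothetical helpers.

```python
def pick_backend(model_name: str) -> str:
    """Hypothetical rule: `user/model_name` goes to Hugging Face, anything else to OpenAI."""
    return "huggingface" if "/" in model_name else "openai"


def count_tokens(text: str, model_name: str) -> int:
    """Count tokens in `text` with the tokenizer that belongs to `model_name`."""
    if pick_backend(model_name) == "huggingface":
        # Requires `transformers`; the tokenizer is downloaded and cached on first use.
        from transformers import AutoTokenizer

        tokenizer = AutoTokenizer.from_pretrained(model_name)
        # add_special_tokens=False counts only the text's own tokens,
        # without BOS/EOS-style markers the tokenizer might otherwise add.
        return len(tokenizer.encode(text, add_special_tokens=False))

    # Requires `tiktoken`; raises KeyError for model names it can't map to an encoding.
    # The encoding file is downloaded and cached on first use.
    import tiktoken

    encoding = tiktoken.encoding_for_model(model_name)
    return len(encoding.encode(text))


# Example calls (need the packages installed and network access the first time):
# count_tokens("Hello, world!", "openai-community/gpt2")
# count_tokens("Hello, world!", "gpt-4")
```

The imports sit inside the branches so you only need the library for the backend you actually use.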

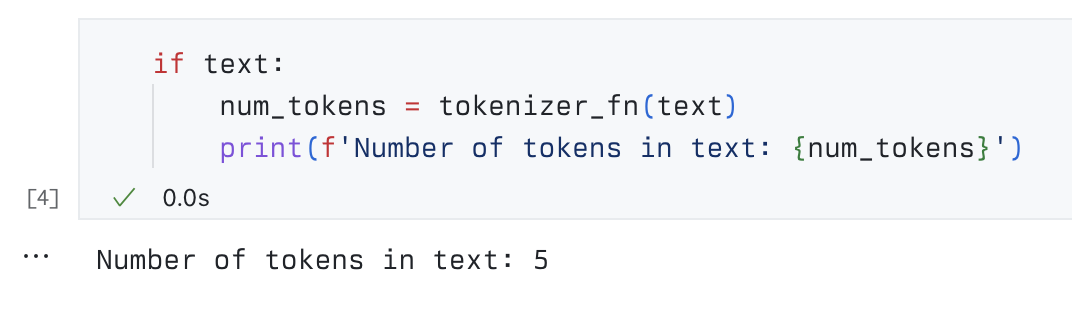

Finally, run all cells, then scroll down to the respective cell to see the number of tokens for your string, file, or folder.

What’s great about using a Jupyter Notebook to count tokens is that it runs on your machine. It doesn’t depend on an external service, doesn’t incur any costs, and lets you securely calculate the number of tokens in your strings for virtually any language model.

Try it and let me know what you think!