Language model benchmarks only tell half a story

When it comes to language models, we tend to look at benchmarks to decide which model is the best one to use in our application. But benchmarks only tell half a story. Unless you’re building an all-purpose chat application, what you should actually be looking at is how well a model works for your application.

This article is based on a benchmark I put together to evaluate which of the language models available on Ollama is best suited for Dev Proxy. You can find the working prototype on GitHub. I’m working on a similar solution that supports OpenAI-compatible APIs and will publish it shortly.

When the best isn’t the best

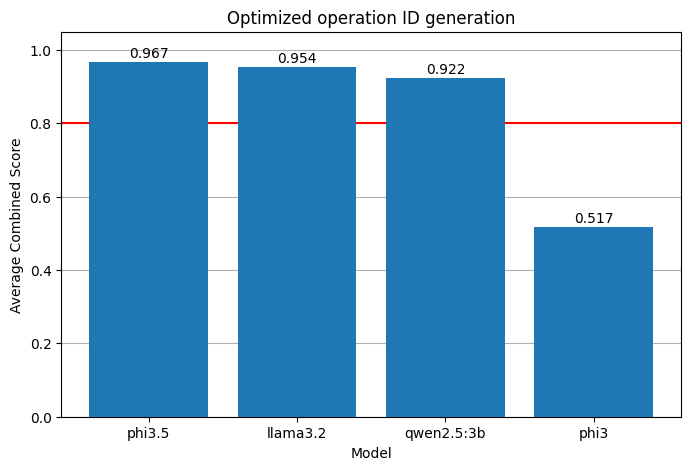

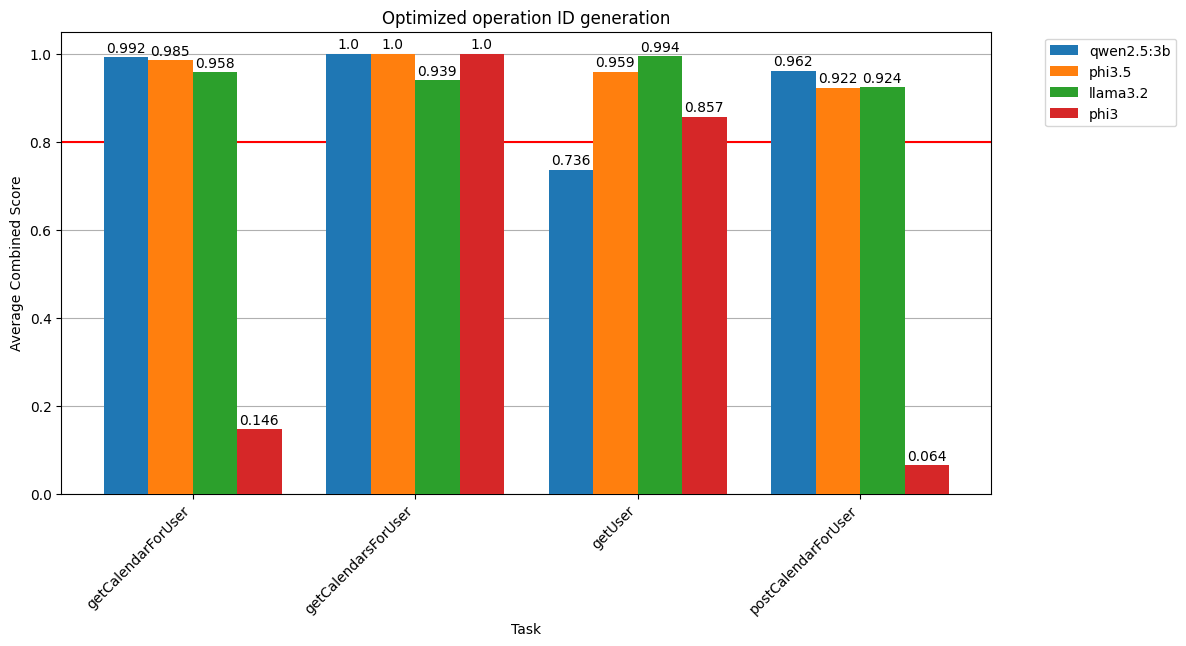

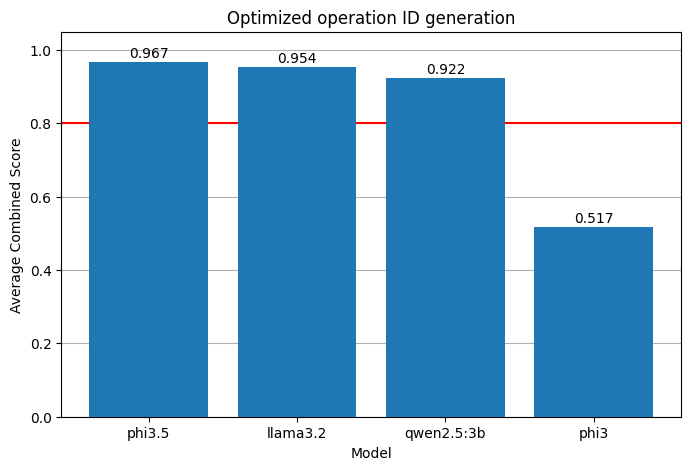

When we started looking at integrating local language models with Dev Proxy to improve generating OpenAPI specs, we used Phi-3 on Ollama. It seemed like a reasonable choice: the model was pretty quick and capable, at least according to benchmarks. It turned out that we couldn’t have picked a worse model for our needs.

The problem with language model benchmarks

We love benchmarks and comparisons. In a world of abundance, they offer an easy answer to the question of which product is the best and which one we should choose. The thing is, though, that a benchmark is based on specific criteria. Unless you plan to use the product exactly according to those criteria, the benchmark is, well, useless. Sure, the results tell you something about the product, but it’s hardly the information you need to make a choice.

So rather than relying on standard benchmarks, when it comes to choosing the best model for your application, what you actually need is to tell how well a model works for your scenario. You need your own benchmark.

Build your own language model benchmark

Evaluating language models is a science. There are many papers written on the subject, and if you’re building a language model for general use, you should definitely read them. But if you’re reading this article, probably all you care about is which model you should use in your application. You don’t care about most cases. You care about your case, and how the model works for you. To understand how well a model works for you, you should put together a benchmark.

Benchmark building blocks

Typically, a benchmark consists of the following elements:

- one or more test cases including the test scenario (prompt and parameters) and expected outcome

- evaluation criteria

- scoring system

Test cases represent how your application uses the language model. For each test case, you need to include one or more expected results. Evaluation criteria define how you’re going to verify, in a repeatable way, that the actual result for a test case is like the one you expected. The scoring system allows you to quickly compare the results for each model you tested and tell which one works best for you.
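To make these building blocks concrete, here is a minimal sketch of how they could be modeled in Python. The class and field names (`BenchmarkCase`, `Benchmark`, `weights`) are illustrative assumptions, not the structure used in the actual workbench.

```python
from dataclasses import dataclass, field


@dataclass
class BenchmarkCase:
    """One way the application calls the model, plus the acceptable answers."""
    name: str
    prompt: str              # prompt sent to the model
    parameters: dict         # model parameters, e.g. {"temperature": 0}
    references: list[str]    # one or more expected outcomes


@dataclass
class Benchmark:
    test_cases: list[BenchmarkCase]
    # Evaluation criteria and scoring system in one place (an assumption):
    # scoring function name -> weight in the overall score.
    weights: dict[str, float] = field(default_factory=dict)

    def validate(self) -> None:
        """Fail early if the benchmark can't produce a comparable score."""
        if not self.test_cases:
            raise ValueError("a benchmark needs at least one test case")
        for case in self.test_cases:
            if not case.references:
                raise ValueError(f"{case.name}: needs at least one expected outcome")
        if abs(sum(self.weights.values()) - 1.0) > 1e-9:
            raise ValueError("scoring weights should sum to 1")
```

Keeping the weights next to the test cases makes it easy to see, in one place, what a score for a given scenario actually means.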

Benchmark for a language model

Since we’re talking about testing language models, there are a few more things that you need to consider while building a benchmark.

Language models are generative and their output is non-deterministic. If you run the same prompt twice, you’ll likely get two different answers. This means that:

- To get a representative result, you need to run each test case several times.

- There are probably several correct answers to each test case.

- You can’t use a simple string comparison to evaluate the actual and expected output.

Evaluating language model output

To solve the first challenge, you need to run each test case several times and take the average score.

For the second part, you should provide several reference examples. When scoring a language model’s output against these references, take the highest score.
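These two rules can be sketched in a few lines of Python. The function below is a hypothetical helper, not code from the workbench: `generate` stands for whatever calls your model, and `score` is any function that compares an output to a reference and returns a number between 0 and 1.

```python
from statistics import mean
from typing import Callable


def score_case(
    generate: Callable[[], str],
    references: list[str],
    score: Callable[[str, str], float],
    runs: int = 5,
) -> float:
    """Average, over several runs, of the best score against any reference."""
    per_run = []
    for _ in range(runs):
        # One model call per run; the same output is compared to every reference.
        output = generate()
        per_run.append(max(score(output, ref) for ref in references))
    return mean(per_run)
```

The number of runs is a trade-off: more runs give a more stable average, but each one costs a model call.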

The final problem is a bit trickier. The good news is that it’s not a new problem, and there are several commonly used functions for scoring language model output, such as BLEU, ROUGE, BERTScore, or edit distance. The challenging part is that you’ll likely need a combination of these functions to determine the efficacy of a model for your scenario. To do that, you’ll define a weighted score, where each function is assigned an importance (weight) in the overall score.

Example evaluations for Dev Proxy scenarios

To put it all in practice, let’s look at two scenarios that we use in Dev Proxy.

Generate API operation ID

One way we use language models in Dev Proxy is to generate the ID of an API operation from a request method and URL when generating OpenAPI specs. For example, for a request like POST https://graph.microsoft.com/users/{users-id}/calendars, we want to get addUserCalendar or an equivalent.

For this case, we use the following functions and weights:

| Function | Weight |
|---|---|
| BERT-F | 0.45 |
| Edit distance | 0.10 |
| ROUGE-1 | 0.25 |
| ROUGE-L | 0.20 |

We assign the most weight to BERT-F because we want the generated ID to be semantically accurate. Ideally, the generated ID also matches one of our expected IDs (ROUGE-L), and the more its words overlap with them, the better (ROUGE-1). Finally, we don’t want the ID to deviate too much from our examples (edit distance).

You could debate each weight endlessly. It doesn’t really matter if you assign 0.45 or 0.43 to a function. What matters is that you can explain which characteristics of the answer you care about and why, and that answers that are good in your eyes get good scores.
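As a rough sketch, the weighted score for the operation ID scenario could be computed like this. The dictionary keys and function names are my own, and I’m assuming every function returns a value between 0 and 1 where higher is better. That means the raw edit distance has to be turned into a similarity first, which is done here by normalizing the Levenshtein distance by the length of the longer string.

```python
# Weights from the operation ID table above; the key names are illustrative.
OPERATION_ID_WEIGHTS = {
    "bert_f": 0.45,
    "edit_distance": 0.10,
    "rouge_1": 0.25,
    "rouge_l": 0.20,
}


def edit_similarity(a: str, b: str) -> float:
    """1 - normalized Levenshtein distance, so that higher is better."""
    if not a and not b:
        return 1.0
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,                # deletion
                curr[j - 1] + 1,            # insertion
                prev[j - 1] + (ca != cb),   # substitution (free if chars match)
            ))
        prev = curr
    return 1 - prev[-1] / max(len(a), len(b))


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-function scores (each in [0, 1]) into a single number."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight
```

Dividing by the total weight keeps the result between 0 and 1 even if the weights you experiment with don’t add up to exactly 1.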

Generate API operation description

Another scenario for which we use language models in Dev Proxy is to generate an operation’s description for use in an OpenAPI spec. For example, given a request GET https://api.contoso.com/users/{users-id}/calendars, we want to get Retrieve a user's calendars or something similar. For this case, we use the following evaluation criteria:

| Function | Weight |
|---|---|
| BERT-F | 0.45 |
| Edit distance | 0.10 |
| ROUGE-2 | 0.25 |
| ROUGE-L | 0.20 |

In this case, we replaced ROUGE-1 with ROUGE-2. When generating the operation ID, we looked for the overlap of individual tokens (e.g. getUserById vs. getUserByIdentifier matches 3 out of 4 words). Since a description is more elaborate, we decided to look at matching word pairs rather than single words.

Again, this isn’t the one right way to evaluate language model output. It’s the way we chose for our scenario. I encourage you to experiment with different functions and weights to see what works for you.
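To illustrate the difference between single words and word pairs, here is a simplified n-gram overlap score in the spirit of ROUGE-N. It’s a sketch written for this article, not the implementation from a ROUGE library, and the camelCase tokenizer is my own assumption about how an operation ID gets split into words.

```python
import re
from collections import Counter


def split_camel_case(identifier: str) -> list[str]:
    """Split an identifier such as getUserById into lowercase words."""
    parts = re.findall(r"[A-Z]?[a-z0-9]+|[A-Z]+(?![a-z])", identifier)
    return [part.lower() for part in parts]


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (runs of n consecutive tokens) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: list[str], reference: list[str], n: int) -> float:
    """F1 over the clipped n-gram overlap (a simplified ROUGE-N)."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


generated = split_camel_case("getUserById")
expected = split_camel_case("getUserByIdentifier")
print(rouge_n(generated, expected, 1))  # 3 of 4 single words match
print(rouge_n(generated, expected, 2))  # 2 of 3 word pairs match
```

The same pair of IDs scores lower on word pairs than on single words, which is why pairs are the stricter choice for longer text such as descriptions.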

A word on BERTScore

BERTScore is a great and convenient way to check whether the generated text is semantically similar to the reference. The only trouble is that, compared to other scoring functions, even weak results can score as high as 0.8 out of 1. So if you combine it with other functions, you’ll get skewed results. To avoid this, you need to normalize its value, for example: for x ≥ 0.95, give full contribution (1); for 0.75 ≤ x < 0.95, scale linearly; and for x < 0.75, drop to 0.
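The normalization described above can be written as a small function. The thresholds are the example values from this section, and the function name is hypothetical. You’ll likely want to tune the thresholds for your own scenario.

```python
def normalize_bert_score(x: float, low: float = 0.75, high: float = 0.95) -> float:
    """Rescale a raw BERTScore so that weak matches contribute nothing."""
    if x >= high:
        return 1.0   # full contribution
    if x < low:
        return 0.0   # too weak to count
    return (x - low) / (high - low)   # linear between the two thresholds
```

With these thresholds, a raw score of 0.85 lands halfway between the two and contributes about 0.5, while a raw score of 0.8, which looks decent at first glance, contributes only about 0.25.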

Putting it all together

Putting it all together, you’ll end up with a solution that consists of the following building blocks:

- test scenario based on a parametrized language model prompt

- one or more test cases, each with values for the prompt parameters and several reference values

- weighted score based on several scoring functions

- test runner that:

  - runs the test scenario against a language model,

  - evaluates and scores its output,

  - presents the comparison result
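The building blocks above could be wired together roughly like this. It’s a sketch under a few assumptions, not the code from the workbench: a model is any callable that takes a prompt and returns text, a test case is a plain dictionary with `parameters` and `references` keys, and the scorer is any function returning a value between 0 and 1.

```python
from statistics import mean
from typing import Callable

# Hypothetical shapes: a model maps a prompt to text,
# and a scorer maps (output, reference) to a score in [0, 1].
Model = Callable[[str], str]
Scorer = Callable[[str, str], float]


def run_benchmark(
    models: dict[str, Model],
    prompt_template: str,
    test_cases: list[dict],
    scorer: Scorer,
    runs: int = 3,
) -> list[tuple[str, float]]:
    """Return (model name, average score) pairs, best model first."""
    results = []
    for name, generate in models.items():
        case_scores = []
        for case in test_cases:
            # Fill the parametrized prompt with this test case's values.
            prompt = prompt_template.format(**case["parameters"])
            run_scores = []
            for _ in range(runs):
                output = generate(prompt)
                # Several answers can be correct: keep the best match.
                run_scores.append(
                    max(scorer(output, ref) for ref in case["references"])
                )
            case_scores.append(mean(run_scores))
        results.append((name, mean(case_scores)))
    return sorted(results, key=lambda item: item[1], reverse=True)
```

In practice, each entry in `models` would wrap a call to a model running on Ollama, and the scorer would be the weighted score for the scenario.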

Check out the sample testing workbench that I created and that allows you to evaluate language models running on Ollama. I chose to build it as a Jupyter Notebook using Python, for several reasons:

- Python has ready-to-use libraries with scoring functions, data manipulation, and presentation logic

- Since it’s a fully fledged programming language, I can implement caching of the language models and responses for quicker execution

- Jupyter Notebooks:

  - allow you to combine code and content with additional information

  - can be run interactively

  - combined with VS Code extensions such as Data Wrangler, allow you to explore the variables in your notebook, which is invaluable for debugging and understanding the results

  - persist the last execution state. If you change something, you can conveniently re-run the notebook from the changed part, speeding up the execution

I’m working on a similar version based on OpenAI-compatible APIs, which will also support Prompty files.

Trust but verify

Next time you’re building an application that uses a language model, be sure to test a few models and compare the results. Don’t blindly trust generic benchmarks that aren’t representative of how you use language models in your applications. Instead, see what works best for you. When you change the model you use or update the prompt, re-run your tests and compare the results. You’ll be surprised to see how much difference a model or a prompt can make. When you start testing how you use language models, you’ll not only see which model works best for you, but you’ll also discover which prompts you could improve. If a model scores well in one test but poorly in another, and the scenarios aren’t wildly different, try changing the prompt. Stay curious.